As artificial intelligence becomes deeply embedded in daily life, AI governance has emerged in 2026 as a critical topic for businesses, developers, and users worldwide. Governments, companies, and researchers are working together to ensure AI is safe, ethical, transparent, and accountable. This post explains what AI governance means, why it matters, and how emerging rules around the world are shaping the future of technology.

What Is AI Governance?

AI governance refers to the policies, standards, and practices that guide how AI systems are developed, deployed, and monitored. The goal is to balance innovation with responsibility, ensuring AI benefits society without causing harm.

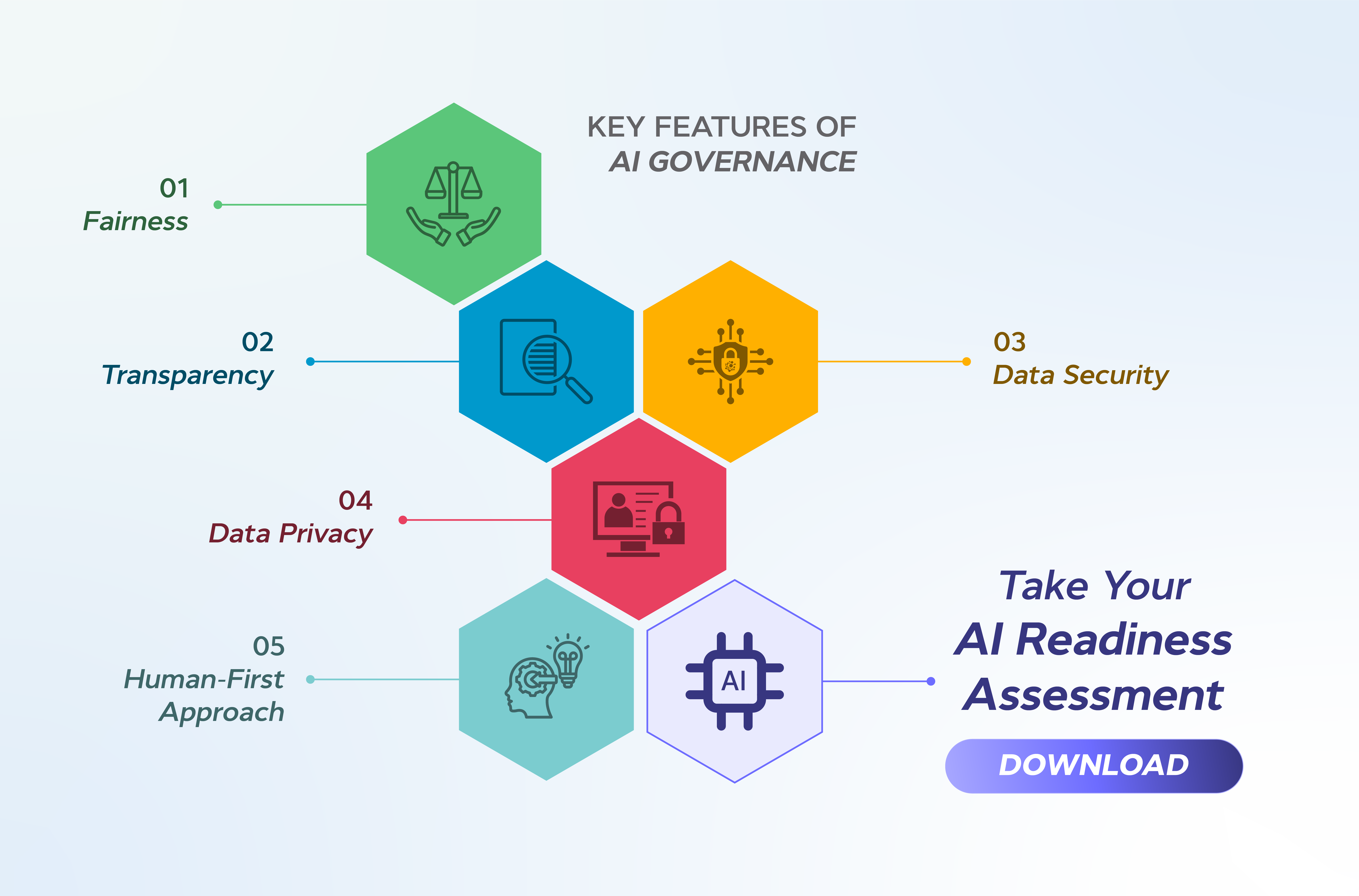

Key areas include:

- Data privacy and protection

- Transparency and explainability

- Bias reduction and fairness

- Security and misuse prevention

- Human oversight and accountability

Why AI Governance Matters More in 2026

In 2026, AI systems influence decisions in finance, healthcare, education, hiring, transportation, and content creation. Without proper governance:

- Biased algorithms can lead to unfair outcomes

- Poor data handling can violate privacy laws

- Unregulated AI can spread misinformation or create security risks

Strong governance builds trust, which is essential for long-term AI adoption.

Global Trends in AI Regulation (2026)

Regions differ in how they structure AI governance, but they share several common goals.

Common Global Principles

- Risk-based regulation: Higher-risk AI systems face stricter rules

- Human-in-the-loop: Humans remain responsible for critical decisions

- Auditability: AI systems must be testable and reviewable

- Transparency: Users should know when AI is being used
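To make the human-in-the-loop and auditability principles more concrete, here is a minimal Python sketch, assuming a system that assigns each request a risk level and a confidence score. The risk tiers, the 0.9 confidence threshold, and the names `Decision`, `route_decision`, and `append_audit_log` are all hypothetical choices for illustration, not part of any regulation or library. High-risk or low-confidence cases go to a human, and every decision is recorded as a JSON line that a reviewer could inspect later.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass
class Decision:
    """One AI-assisted decision, kept so a reviewer can inspect it later."""
    request_id: str
    model_version: str
    risk_level: str   # hypothetical tiers: "low", "medium", "high"
    score: float      # model confidence, assumed to lie in [0, 1]
    outcome: str      # "automated" or "human_review"
    timestamp: str    # UTC time in ISO 8601 format


def route_decision(request_id, model_version, risk_level, score,
                   confidence_threshold=0.9):
    """Send high-risk or low-confidence cases to a human reviewer."""
    needs_human = risk_level == "high" or score < confidence_threshold
    return Decision(
        request_id=request_id,
        model_version=model_version,
        risk_level=risk_level,
        score=score,
        outcome="human_review" if needs_human else "automated",
        timestamp=datetime.now(timezone.utc).isoformat(),
    )


def append_audit_log(decision, log):
    """Record the decision as one JSON line so it can be audited later."""
    log.append(json.dumps(asdict(decision), sort_keys=True))


# Example: three requests with different risk levels and confidence scores.
audit_log = []
for args in [("req-1", "model-v1", "low", 0.97),
             ("req-2", "model-v1", "high", 0.99),
             ("req-3", "model-v1", "medium", 0.62)]:
    decision = route_decision(*args)
    append_audit_log(decision, audit_log)
    print(decision.request_id, decision.outcome)
```

Here only `req-1` is handled automatically: `req-2` is high risk, and `req-3` falls below the confidence threshold. In a real deployment the log would live in durable, append-only storage rather than an in-memory list.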

AI Governance Models Compared

| Aspect | Strict Governance Model | Balanced Governance Model | Light-Touch Model |
|---|---|---|---|
| Innovation Speed | Moderate | High | Very High |
| Risk Control | Very Strong | Strong | Limited |
| Compliance Cost | High | Medium | Low |
| Public Trust | Very High | High | Variable |
| Business Adoption | Slower | Steady | Fast but risky |

Insight: In 2026, many countries appear to be moving toward a balanced governance model that encourages innovation while controlling risk.

How Businesses Can Prepare for AI Governance

If you use or build AI tools, governance is no longer optional.

Practical Steps:

- Document AI workflows clearly

- Audit training data for bias and quality

- Implement explainable AI where possible

- Set up internal AI ethics guidelines

- Monitor AI outputs continuously

These steps reduce legal risk and improve user confidence.
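As one example of what "audit training data for bias" can look like in practice, the Python sketch below compares how often each group receives a positive label in a dataset and divides the lowest rate by the highest. Flagging ratios below 0.8 follows the "four-fifths rule," a common heuristic from US employment guidelines; it is only one of many fairness checks and does not prove or disprove bias on its own. The field names `group` and `label` and the function names are hypothetical.

```python
from collections import defaultdict


def positive_rate_by_group(records, group_key, label_key):
    """Share of records with a positive label (1) within each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for row in records:
        group = row[group_key]
        totals[group] += 1
        if row[label_key] == 1:
            positives[group] += 1
    return {g: positives[g] / totals[g] for g in totals}


def disparate_impact_ratio(rates):
    """Lowest group rate divided by highest group rate (1.0 means parity)."""
    if not rates:
        raise ValueError("no groups to compare")
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group has any positive labels, so rates are equal
    return min(rates.values()) / highest


def audit_label_balance(records, group_key, label_key, threshold=0.8):
    """Return per-group rates, the ratio, and whether it needs review."""
    rates = positive_rate_by_group(records, group_key, label_key)
    ratio = disparate_impact_ratio(rates)
    return rates, ratio, ratio < threshold


# Toy data: group A is labeled positive far more often than group B.
records = [
    {"group": "A", "label": 1}, {"group": "A", "label": 1},
    {"group": "A", "label": 0}, {"group": "A", "label": 1},
    {"group": "B", "label": 1}, {"group": "B", "label": 0},
    {"group": "B", "label": 0}, {"group": "B", "label": 0},
]
rates, ratio, needs_review = audit_label_balance(records, "group", "label")
print(rates)  # {'A': 0.75, 'B': 0.25}
print(round(ratio, 2), needs_review)
```

In this toy dataset group B's positive rate is one third of group A's, well below 0.8, so the data would be flagged for a closer human look before training.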

Role of Developers and Content Creators

AI governance is not just for governments.

- Developers should design responsible-by-default AI systems

- Bloggers and educators should explain AI use clearly and honestly

- Businesses should avoid false claims and overstating AI capabilities

AI Governance and the Future of Innovation

Contrary to a common belief, governance does not have to stifle innovation. In 2026, well-designed governance:

- Encourages better-quality AI products

- Reduces long-term legal and reputational risks

- Creates a level playing field for global competition

Well-governed AI is more likely to scale sustainably across markets.

Final Thoughts

AI governance in 2026 is about building trust at scale. As AI continues to evolve, clear rules and ethical practices are likely to shape which technologies succeed globally. Businesses and creators who adapt early stand to gain a strong competitive advantage.